Showing posts from Image Recognition tag

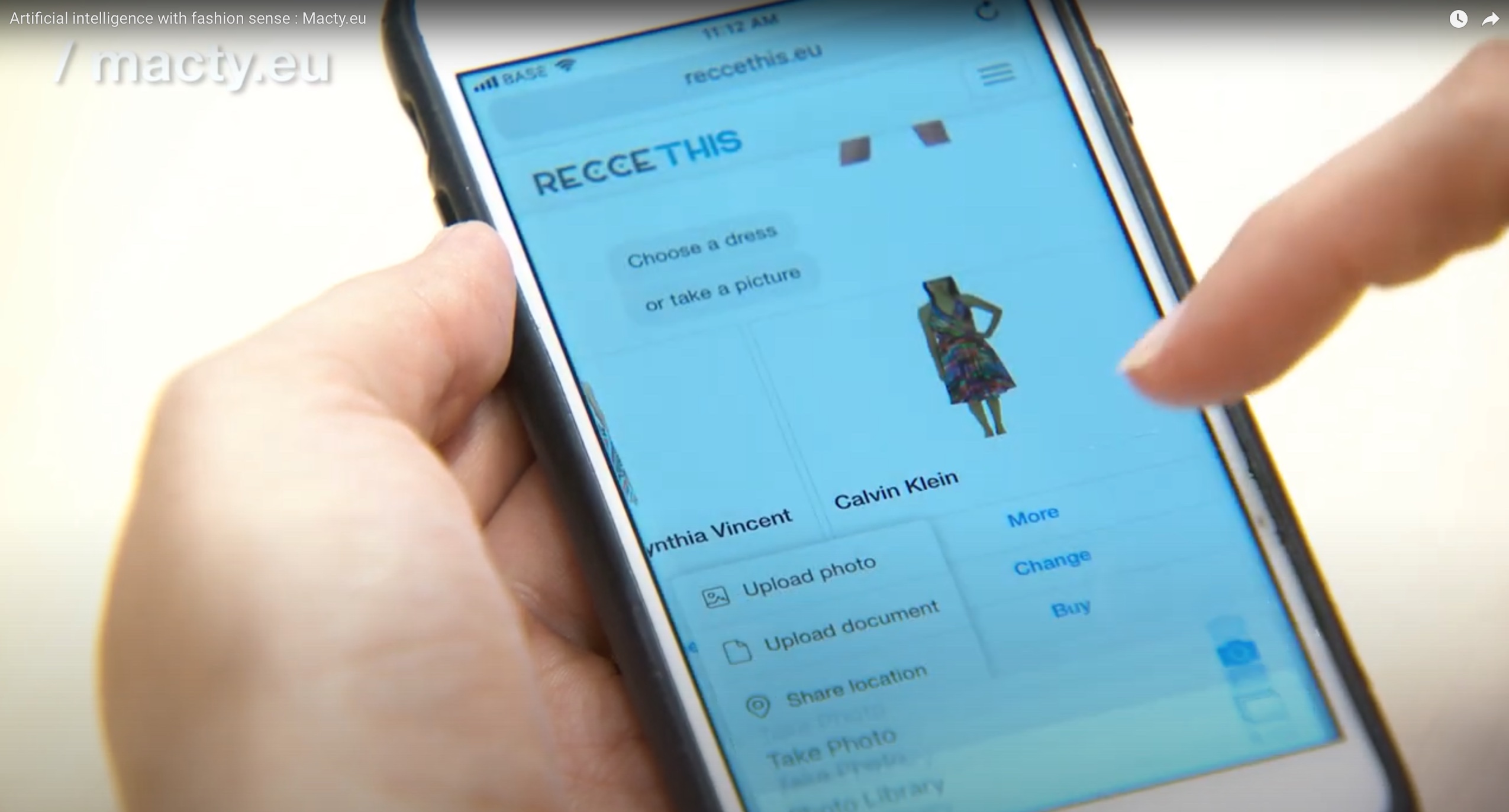

Get the Look on our Chatbot with Visual Search

Leveraging our image and pattern recognition technology, we developed a chatbot that enables users to search product collections using images. Users can also combine language and images in a single query, for example: "I like this item, but I want it with a longer skirt and a V-neck instead of a high neck." See it in action in the video. As the video shows, you can perform the following actions: Upload a picture of something you like and …

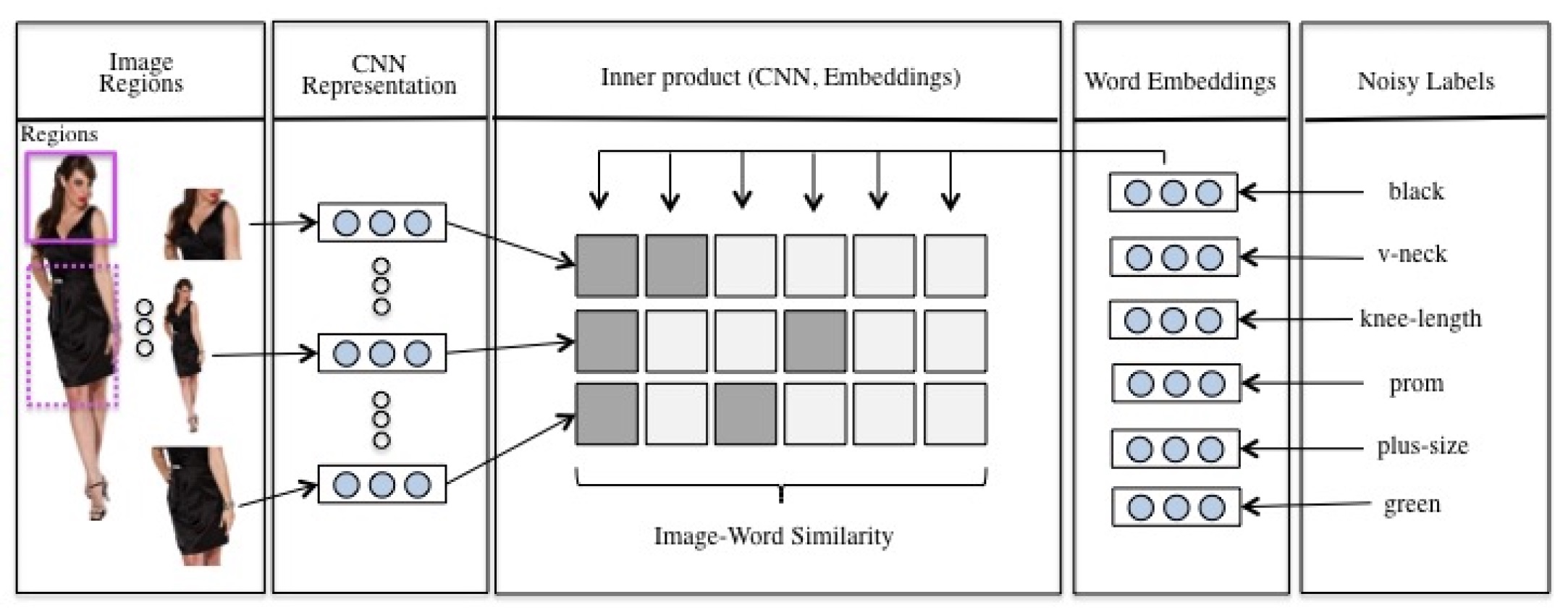

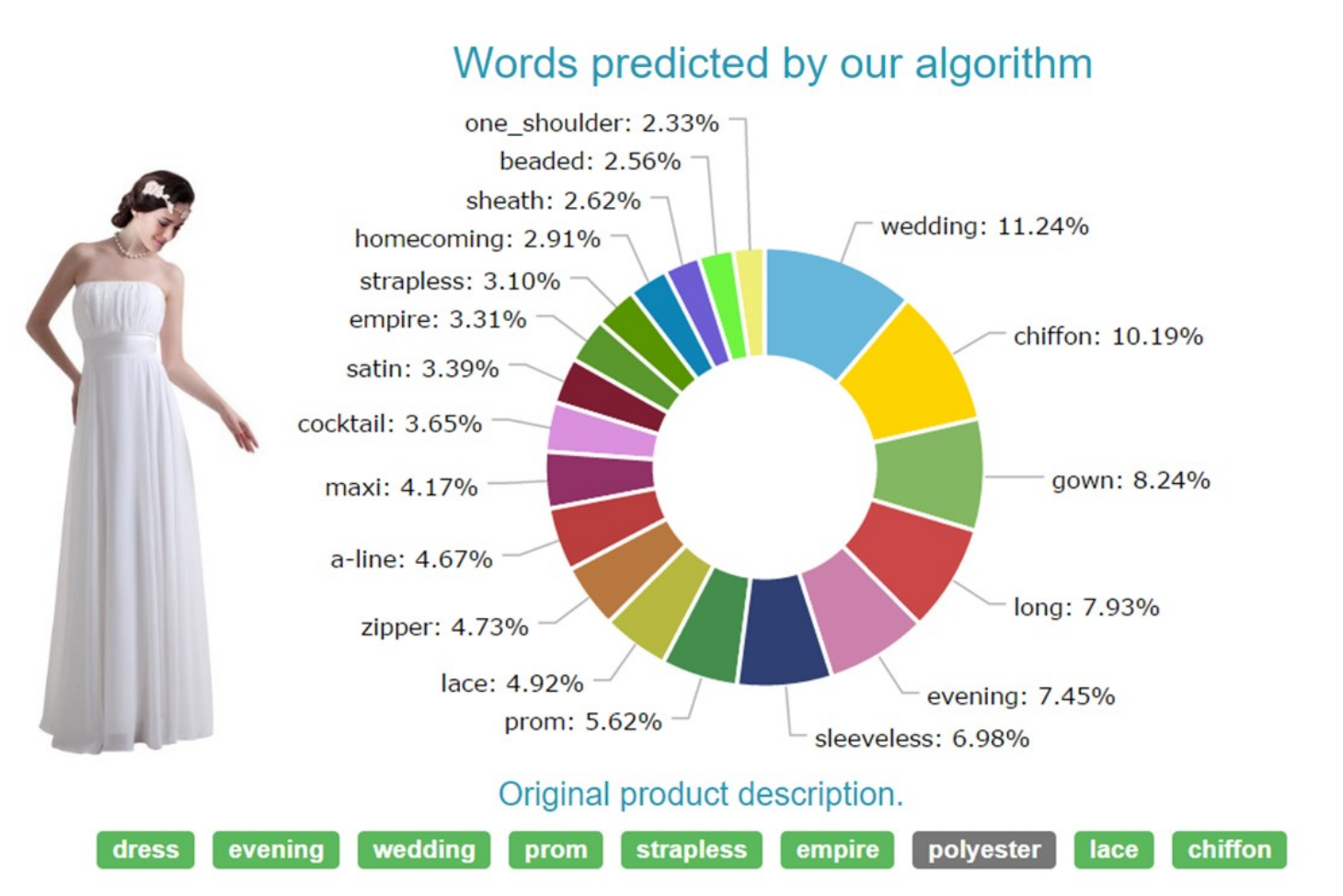

Cross-modal Search for Fashion Attributes

In this paper we develop a neural network which learns intermodal representations for fashion attributes to be utilized in a cross-modal search tool. Our neural network learns from organic e-commerce data, which is characterized by clean image material, but noisy and incomplete product descriptions. First, we experiment with techniques to segment e-commerce images and their product descriptions into respectively image and text fragments denoting fashion attributes. Here, we propose a …

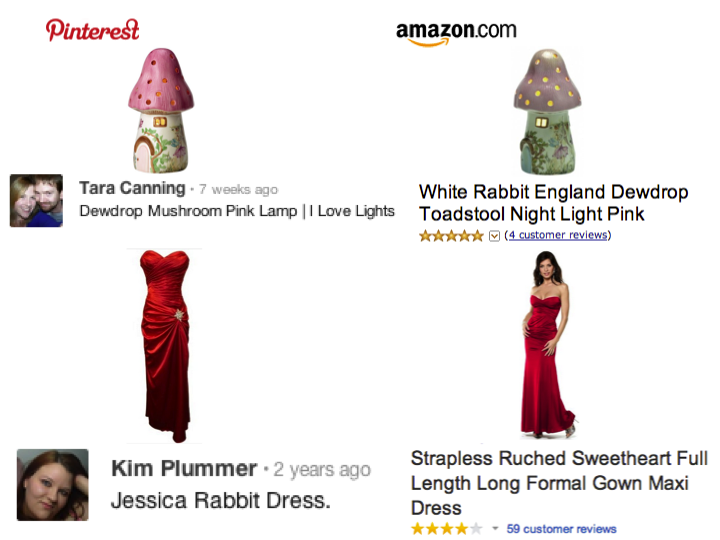

Latent Dirichlet Allocation for Linking User-Generated Content and e-Commerce Data

Automatic linking of online content improves navigation possibilities for end users. We focus on linking content generated by users to other relevant sites. In particular, we study the problem of linking information between different usages of the same language, e.g., colloquial and formal idioms or the language of consumers versus the language of sellers. The challenge is that the same items are described using very distinct vocabularies. As a case study, we investigate a new task of linking …

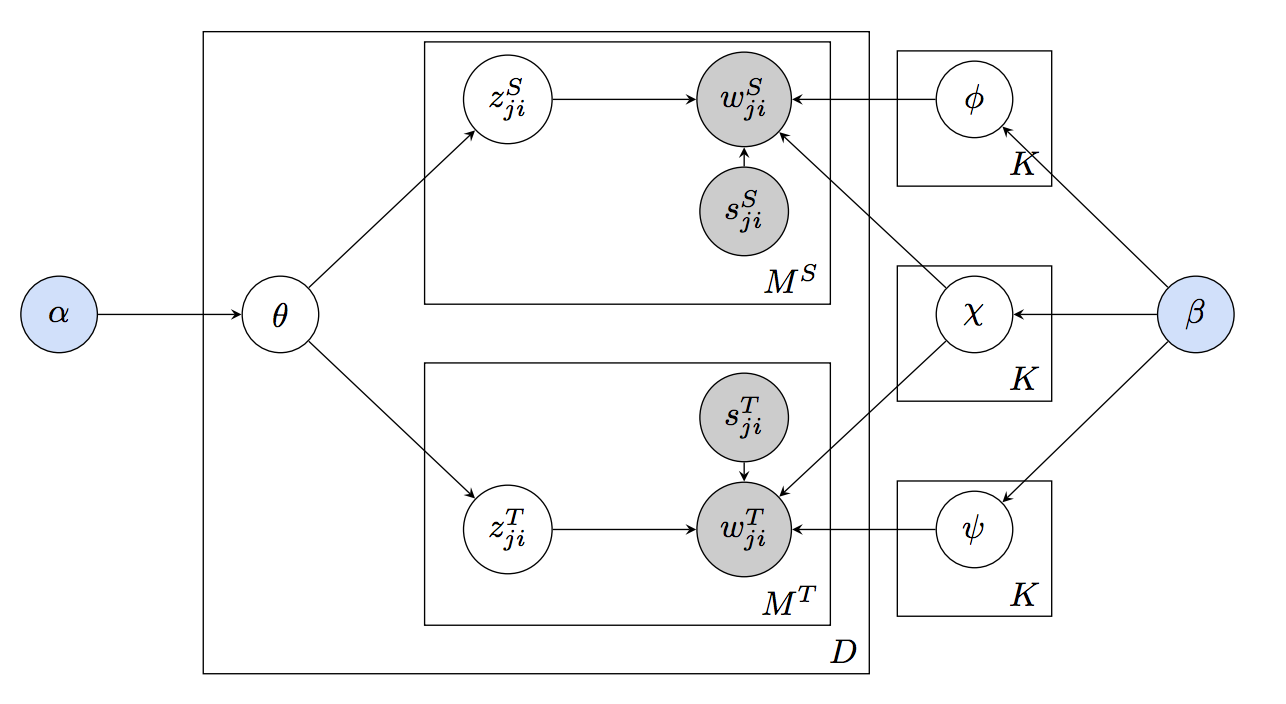

Learning to Bridge Colloquial and Formal Language Applied to Linking and Search of E-Commerce Data

We study the problem of linking information between different idiomatic usages of the same language, for example, colloquial and formal language. We propose a novel probabilistic topic model called multi-idiomatic LDA (MiLDA). Its modeling principles follow the intuition that certain words are shared between two idioms of the same language, while other words are non-shared. We demonstrate the ability of our model to learn relations between cross-idiomatic topics in a dataset containing product …

Inferring User Interests on Social Media From Text and Images

We propose to infer user interests on social media where multi-modal data (text, images, etc.) exist. We leverage user-generated data from Pinterest.com as a natural expression of users’ interests. Our main contribution is exploiting a multi-modal space composed of images and text. This is a natural approach since humans express their interests with a combination of modalities. We performed experiments using state-of-the-art image and textual representations, such as convolutional neural …

Cross-Modal Fashion Search

In this paper we show an online demo that allows bidirectional multimodal queries for garments. Check out our paper: Susana Zoghbi, Geert Heyman, Juan Carlos Gomez, Sien Moens. "Cross-Modal Fashion Search." In Lecture Notes in Computer Science (LNCS), Vol. 9517, pp. 367-373, 2016.